On the debate of GPU #tensor units in #HPC - it looks like we are bound to repeat the history of SIMD units. Those were developed for a very specific use-case (MMX in graphics) and then quickly re-purposed by the HPC community. Vendors reacted fast by adding FP64 support ...

... #HPL @top500supercomp numbers followed and compilers were adapted to "vectorize".

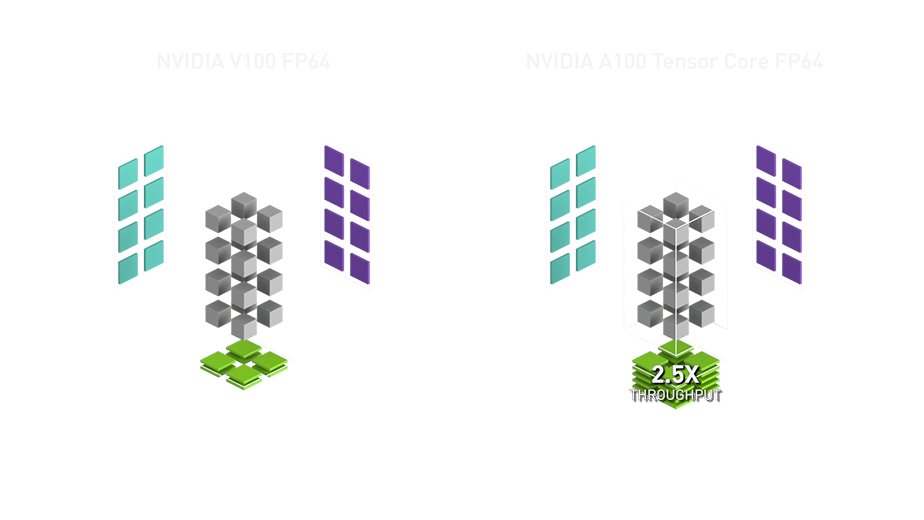

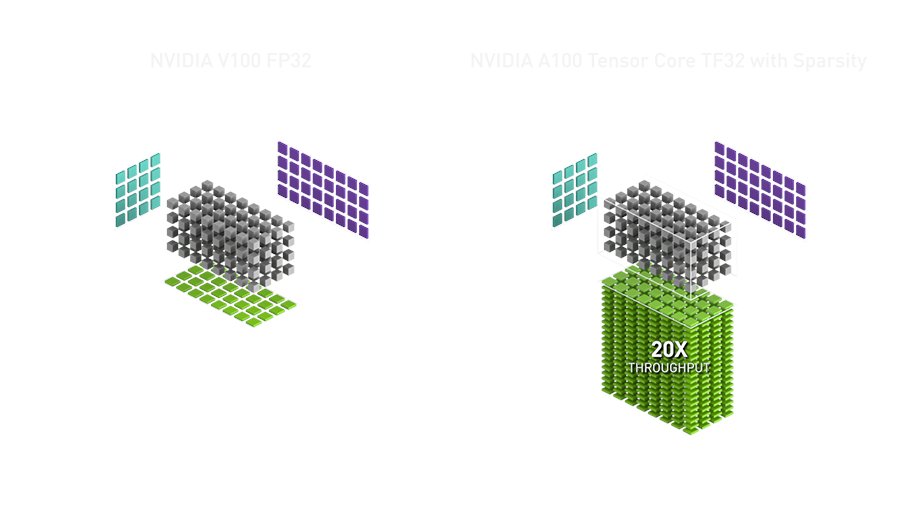

Now, tensor units are introduced in #deeplearning and I don't doubt that those will find their uses in #HPC. Especially if vendors react to specifics of the field, such as sparsity and FP64...

... my worry is more about the local optimum we get stuck in. #HPC always had a better solution than SIMD, the so-called "Cray vectors", and it took more than a decade to get out of the SIMD rut with #SVE and #RISCV!

For tensor units, we have a more generic version with SSRs (stream semantic registers) as a #RISCV extension ...

... https://arxiv.org/abs/1911.08356 and also sparse https://arxiv.org/abs/2011.08070 that allows multi-dimensional configurable vectorization for tensor computations (with @LucaBeniniZhFe and the @pulp_platform team).
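To make "multi-dimensional configurable vectorization" a bit more concrete, here is a toy software model of the idea, not the actual SSR hardware or ISA: a stream is configured once with a base address, loop bounds and strides, and the compute loop then just pulls operands from it. The names `affine_stream` and `matvec` are hypothetical and exist only for this sketch.

```python
from itertools import product

def affine_stream(memory, base, bounds, strides):
    """Yield memory[base + sum(i_k * strides[k])] for a nested loop.

    bounds and strides have one entry per loop dimension; the last
    dimension is the innermost (fastest varying) one.
    """
    for idx in product(*(range(b) for b in bounds)):
        yield memory[base + sum(i * s for i, s in zip(idx, strides))]

def matvec(memory, a_base, x_base, rows, cols):
    # Stream A row by row (row-major layout assumed for this sketch).
    a = affine_stream(memory, a_base, (rows, cols), (cols, 1))
    # Replay x once per row by giving the outer dimension a stride of 0.
    x = affine_stream(memory, x_base, (rows, cols), (0, 1))
    y = []
    for _ in range(rows):
        acc = 0
        for _ in range(cols):
            # The loop body contains no address arithmetic, only compute.
            acc += next(a) * next(x)
        y.append(acc)
    return y

# A = [[1, 2, 3], [4, 5, 6]] stored at address 0, x = [10, 20, 30] at address 6.
memory = [1, 2, 3, 4, 5, 6, 10, 20, 30]
result = matvec(memory, 0, 6, 2, 3)
```

The point of the sketch is the split between configuration (bounds and strides, set up once) and the inner loop, which only consumes operands. Changing the bounds and strides changes the access pattern without touching the compute loop.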

I am now watching for history to be made by the vendors. There is no panacea!
