Some thoughts after seeing early EH RAPM results...

The highest ever RAPM xG±/60 per their model was Eric Staal's mark of +0.555 in 08-09.

There are 5 players with DOUBLE that mark right now. So, 5 guys are having a year TWICE as good as the previous GOAT play-driving season.

Why is this? Is this just some weird side effect of the pandemic that caused the standard deviation of NHL talent to suddenly double overnight, making today's best/worst players twice as good/bad as they were yesterday?

No, not at all. It's just a smaller, more unstable sample.

For those who aren't aware, RAPM is a ridge regression which biases all coefficients towards zero. This is necessary to handle instability.
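
For anyone who wants to see that shrinkage in action, here is a minimal sketch on made-up data, not Evolving Hockey's actual model. The `ridge_fit` helper, the 0/1 on-ice matrix and the impact numbers are all assumptions for illustration, and the penalty is written against mean squared error, which is only one common convention. Two players are given identical ice time, the case where an unpenalized regression can't tell them apart.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge: minimize mean squared error + lam * sum(beta**2)."""
    n, p = X.shape
    # Normal equations of the penalized problem: (X'X / n + lam * I) beta = X'y / n
    return np.linalg.solve(X.T @ X / n + lam * np.eye(p), X.T @ y / n)

rng = np.random.default_rng(0)
n_shifts, n_players = 400, 6

# Hypothetical design matrix: 1 if the player was on the ice for a shift, else 0.
X = rng.integers(0, 2, size=(n_shifts, n_players)).astype(float)
X[:, 1] = X[:, 0]  # players 0 and 1 always share the ice (perfectly collinear)

# Made-up "true" impacts and noisy shift outcomes (no intercept, for brevity).
true_impact = np.array([0.3, 0.1, -0.2, 0.0, 0.2, -0.1])
y = X @ true_impact + rng.normal(0.0, 1.0, n_shifts)

for lam in (0.01, 0.1, 1.0):
    beta = ridge_fit(X, y, lam)
    print(f"lam={lam:<5} linemates={beta[:2].round(3)} size={np.linalg.norm(beta):.3f}")
```

Raising `lam` pulls the coefficient vector as a whole closer to zero, and the two inseparable linemates split the credit evenly instead of getting arbitrary or exploding values.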

Evolving Hockey uses a unified Lambda for their RAPM calculations, which means they apply the same degree of shrinkage to each season.

I presume this unified Lambda was obtained from cross validation run on full seasons, where the data is far more stable and requires far less shrinkage. (Cross validation picks the degree of shrinkage that minimizes the mean squared error on held-out data.)

If you run cross validation on a small sample like the <100 games played this season, you will get a FAR higher Lambda value, which calls for much more shrinkage.
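
A rough sketch of that comparison on simulated data, in case it helps. Everything here is an assumption for illustration: the `cv_lambda` and `simulate` helpers, the sample sizes, the talent spread and the lambda grid are hypothetical, and the penalty is scaled against mean squared error, which may not match the parameterization behind the numbers in this thread. The talent distribution is identical in both runs; only the number of rows changes.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge: minimize mean squared error + lam * sum(beta**2)."""
    n, p = X.shape
    return np.linalg.solve(X.T @ X / n + lam * np.eye(p), X.T @ y / n)

def cv_lambda(X, y, lambdas, k=5, seed=0):
    """Return the lambda with the lowest k-fold mean squared prediction error."""
    idx = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(idx, k)
    errors = np.zeros(len(lambdas))
    for fold in folds:
        train = np.setdiff1d(idx, fold)  # every row that is not in the held-out fold
        for j, lam in enumerate(lambdas):
            beta = ridge_fit(X[train], y[train], lam)
            errors[j] += np.mean((y[fold] - X[fold] @ beta) ** 2) / k
    return float(lambdas[int(np.argmin(errors))])

def simulate(n_rows, n_players=40, seed=1):
    """Made-up shifts: the same player impacts regardless of how many rows are drawn."""
    rng = np.random.default_rng(seed)
    impact = rng.normal(0.0, 0.2, n_players)  # drawn first so it matches across sizes
    X = rng.integers(0, 2, size=(n_rows, n_players)).astype(float)
    y = X @ impact + rng.normal(0.0, 1.0, n_rows)
    return X, y

lambdas = np.logspace(-4, 1, 30)
for label, n_rows in (("short sample", 150), ("full sample", 6000)):
    X, y = simulate(n_rows)
    print(label, "-> lambda chosen by CV:", cv_lambda(X, y, lambdas))
```

In simulations like this the shorter sample usually ends up with the larger Lambda, though the exact values depend on the seed, the grid and how the penalty is scaled.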

In hockey terms, the computer says: "The sample is too small to state with much confidence anybody is great/awful"

To give you an idea of this in action:

I used cross validation to obtain the ideal Lambda for even strength xG RAPM for 18-19 (the last 82-game season). The Lambda I received was 0.045.

Then I repeated the process for 2021. The Lambda I received was 0.67. That's roughly 15 times higher!

In other words, the ideal degree of shrinkage within this minuscule sample, where teams have faced at most 3 opponents, is FIFTEEN times higher than it is in a normal 82-game season. The distribution of talent didn't change; only the stability of the sample did.
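
To get a feel for what a 15x change in Lambda does to the extremes, here is a small sketch that fits one thin, simulated sample at both values. The data, the `ridge_fit` helper and the penalty scaling are assumptions for illustration, so 0.045 and 0.67 are reused only as labels and the printed ratings are not comparable to real RAPM numbers.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge: minimize mean squared error + lam * sum(beta**2)."""
    n, p = X.shape
    return np.linalg.solve(X.T @ X / n + lam * np.eye(p), X.T @ y / n)

rng = np.random.default_rng(42)
n_players, n_shifts = 60, 300  # deliberately few shifts per player

# Fixed, made-up talent spread; only the amount of shrinkage changes below.
true_impact = rng.normal(0.0, 0.15, n_players)
X = rng.integers(0, 2, size=(n_shifts, n_players)).astype(float)
y = X @ true_impact + rng.normal(0.0, 1.0, n_shifts)

print(f"true best={true_impact.max():+.3f} true worst={true_impact.min():+.3f}")
for lam in (0.045, 0.67):
    ratings = ridge_fit(X, y, lam)
    print(f"lambda={lam}: best={ratings.max():+.3f} worst={ratings.min():+.3f} "
          f"overall size={np.linalg.norm(ratings):.3f}")
```

Same players, same shifts: the only thing that moves the estimated ratings between the two runs is the Lambda.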

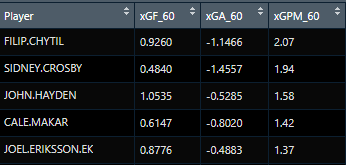

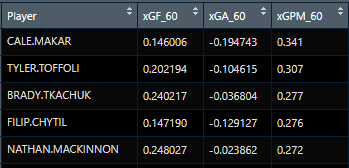

Strictly for illustrative purposes, let's look at this in action. I ran even strength xG RAPM once for 2021 using the same Lambda value that I did for 2018-2019 (left), and once using the Lambda selected for this season through cross validation (right).

Here's how they differ:

Here's how they differ:

As for making my own RAPM data available for this season, it won't be any time soon. My friend @w_finck summarized my feelings well when he said this:

"RAPM shouldn’t be run until there is a large enough sample to reduce Lambda close to what it is in other seasons."